Banner artwork by Marish / Shutterstock.com

Behind the scenes of AI’s brilliance lies an energy-intensive process with a staggering carbon footprint. According to Earth.org, the energy needed to train increasingly complex models on ever-larger datasets is “enormous” and “directly affects greenhouse gas emissions, aggravating climate change.”

The speed and reach of AI were the topic of 2023, and last year I wrote about the intersection of legal operations technology implementation and ethical tech deployment. But AI is more than large language models and datasets: it is increasingly integrated into the “fun” aspects of our daily lives. From avatars to Ray-Ban smart glasses, the underlying AI has a large carbon footprint and raises ethical questions about our privacy.

I spoke with Graeme Grovum, head of legal technology and client services at Allens, about the ethics of AI in some of the more “fun” aspects of our lives.

What do you think when you hear AI and ethics?

“I don't think that there is any bright line distinction that we should draw between Generative AI and other types of AI or advanced technologies of the last decade when it comes to ethics, but there are new ways that AI will be used unethically,” he shares. “Our collectively enthusiastic uptake of social media over the last 15 years has created an enormous data 'footprint' for most of us. And, as blue_beetle astutely observed in 2010, ‘If you are not paying for it, you're not the customer; you're the product being sold.’”

When considering basic ethical issues, Grovum identified the following areas.

Persuasive AI

The challenges of using and misusing our personal data are most evident in the practices of social media companies. Grovum agrees, saying, “It seems a long time ago, and, unfortunately, much has happened since that has edged it from our collective consciousness, but the Cambridge Analytica scandal of 2018 was a canary in the coal mine for the outcomes that can be engineered when personal data is mined at sufficient scale.”

Grovum points to psychologist Sander van der Linden’s book, Foolproof: Why Misinformation Infects Our Minds and How to Build Immunity (also summarized in his article), which highlighted the success of political microtargeting. “What is so concerning about this result is that, as opposed to regular political ads, people cannot reasonably defend themselves against persuasion attacks when they don’t even know that they are being targeted.”

The accuracy of algorithms

Grovum also points to David Stillwell and Michael Kosinski’s paper showing the accuracy of computer-based personality tests. Grovum says, “I was fascinated by the findings that, using only 10 likes, the computer algorithm was more accurate than a work colleague about someone’s personality. The paper went on to discuss how the algorithm only needed 70 likes to outperform the prediction of friends, 150 likes to outperform family, and about 300 likes to outperform a spouse.”

The mosaic theory

The “mosaic theory” holds that individually harmless pieces of information, when combined with other pieces, can generate a composite (or mosaic) that can damage Australia’s national security or international relations. As Grovum notes, “In 2023, the Australian Federal Police was called out for (possibly) misusing AI on privately collected data from vendor Auror. This comes after it was ordered to strengthen its privacy governance following trouble in 2021 over its use of Clearview AI's facial recognition software.”

The end of personal privacy

“We appear to be living in a post-privacy world for the most part, brought about by the ease by which data can be collected by anyone, and the seemingly trivial trade-off between relinquishing personal information and earning a reward. This really is behavioral psychology at its most basic, isn't it?! I still wear the socks that I traded my personal information for at an AWS conference a few years ago,” says Grovum.

“It's interesting to think about why we do this,” he continues. “I've noticed that I have a bias towards entrusting my personal data to the biggest corporations (where perception of risk is minimal, in part due to the feeling that I have an anonymity of sorts as one of many millions of users) over giving my personal details to an actual person (the local plumber, for example). Why is this? Perhaps we (rightly) expect that the large company will have more and better security systems to safeguard our data, while the plumber might leave a photocopy of my credit card information lying around for someone to misuse.”

Grovum also highlights companies collecting biometric data “without our (knowing) consent and the use of that data for self-interested purposes (including seemingly punitive ones). Sara Migliorini argues this amounts to ‘biometric harm,’ a new kind of harm that should be defined as ‘arising from the use of biometrics to identify and classify people without a valid legal justification.’”

What are the biggest recent changes in AI that have added to the ethics argument?

Grovum thinks that the introduction of Generative AI has “added an entirely new wrinkle to the idea of ‘the ethical use of AI’. I've heard it said, and I believe it to be true, that in the future we will regard 2022 as the last year when we could reliably assume that content (any content) was human-generated. It might be cold comfort, in a world already plagued by disinformation campaigns, to ‘appreciate’ the fact that disinformation was at least created by human(s) until 2023.

“We are, instead, faced with the prospect of an explosion of content that is automatically generated.

“This content might be true, might be largely true, might only be partially true, or might not be true at all.”

Grovum thinks this leads to “the requirement for a heightened awareness of possible disinformation and vigilance against its use. We've all seen the Avianca Airlines citation debacle from 2023, and now are being treated to the same absurdity from former Trump fixer Michael Cohen. Closer to home, a submission to the Senate inquiry into the Big Four accounting firms by a group of academics was seriously undermined by the last-minute inclusion of numerous false allegations of wrongdoing concocted by Google Bard when used as a research tool by the lead academic.

“Another way that generative AI is possibly catching out some legal practitioners is in the context of keeping client information confidential. Entering non-obfuscated client data into ChatGPT sends that data to OpenAI's servers, where, depending on the user's settings, the conversation may be used to train future models. This has broader ramifications than just the client-lawyer relationship. In May 2023, Samsung banned the use of ChatGPT by its employees after it came to light that sensitive code had been uploaded to OpenAI's servers through the chatbot.

“As we get further into the world of generated content, it's easy to imagine disinformation sites that mimic real sites and that contain huge amounts of AI-generated content. The constraint of human-in-the-loop creation has been removed; it can all be automated. Where does that leave us when we get a citation, and the link is real, and the information at the end of the link exists, but the entire site (or report, or presentation, etc.) is AI-generated?”

ESG’s intersection with AI is becoming more important. What are your thoughts on the environmental impact of AI?

“You might be familiar with the idea of something being 'computationally expensive'. That's the idea that, for a given input size, some processes require a relatively large number of steps to complete. This translates, at the local level, into your computer hanging when you are attempting to open a large file; your computer fan goes into overdrive as your computer's RAM gets taken over by the file and you sit there watching a little spinning progress indicator.

“But real things are happening! You can hear the fan! You can feel the heat being generated! It's pretty easy to understand, on your local PC, that computationally expensive also means resource-intensive: it's literally making noise and heating up in front of you. Well, it turns out that Generative AI is, as a whole, quite computationally expensive. But, because we live in a world of distributed computing, all of this activity happens on cloud infrastructure that gracefully scales to absorb demand and is nowhere near us. We can't hear the hum of increased fan activity when we ask ChatGPT a question, and we can't feel the heat of an over-used CPU as the response is being generated.”

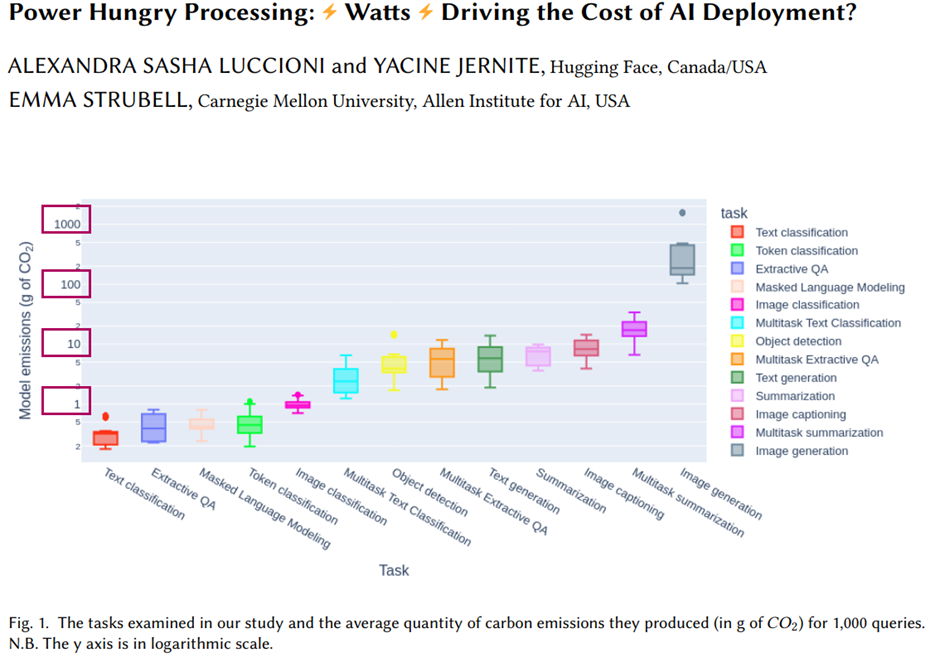

“The distributed nature of cloud computing also makes it hard to make meaningful comparisons between the energy consumption of different types of generative AI. But the November 2023 paper ‘Power Hungry Processing: Watts Driving the Cost of AI Deployment?’ does exactly that, finding:

- Generative tasks are more energy- and carbon-intensive than discriminative tasks;

- Tasks involving images are more energy- and carbon-intensive than those involving text alone;

- Training remains orders of magnitude more energy- and carbon-intensive than inference; and

- Using multi-purpose models for discriminative tasks is more energy-intensive than using task-specific models for these same tasks.

In the chart above, what looks like a “gentle increase” is actually an increase by a factor of 10 (see the boxes I marked).

The authors put these figures in perspective by comparing them to smartphone charging: “For comparison, charging the average smartphone requires 0.012 kWh of energy, which means that the most efficient text generation model uses as much energy as 16 percent of a full smartphone charge for 1,000 inferences, whereas the least efficient image generation model uses as much energy as 950 smartphone charges (11.49 kWh), or nearly 1 charge per image generation, although there is also a large variation between image generation models, depending on the size of image that they generate.”
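Taking the quoted numbers at face value, the comparison can be cross-checked with a few lines of arithmetic. The sketch below uses only the three figures from the passage (0.012 kWh per charge, 16 percent of a charge, 11.49 kWh per 1,000 image inferences); the variable names are our own. Dividing 11.49 kWh by 0.012 kWh gives about 957 charges per 1,000 images, which the paper reports as 950, or just under one charge per image.

```python
# Back-of-the-envelope check of the smartphone comparison quoted above.
# All inputs come from the quoted passage; nothing here is measured.

SMARTPHONE_CHARGE_KWH = 0.012   # energy to fully charge an average smartphone
TEXT_SHARE_OF_CHARGE = 0.16     # most efficient text model: 16% of a charge per 1,000 inferences
IMAGE_KWH_PER_1000 = 11.49      # least efficient image model: kWh per 1,000 inferences
INFERENCES = 1_000


def to_charges(kwh: float) -> float:
    """Express an energy figure in kWh as a number of full smartphone charges."""
    return kwh / SMARTPHONE_CHARGE_KWH


# Energy used by the most efficient text model for 1,000 inferences, in kWh.
text_kwh_per_1000 = TEXT_SHARE_OF_CHARGE * SMARTPHONE_CHARGE_KWH

# Smartphone charges used by the least efficient image model.
image_charges_per_1000 = to_charges(IMAGE_KWH_PER_1000)
image_charges_per_image = image_charges_per_1000 / INFERENCES

# How many times more energy the least efficient image model uses
# than the most efficient text model, for the same number of inferences.
ratio = IMAGE_KWH_PER_1000 / text_kwh_per_1000

if __name__ == "__main__":
    print(f"Text model, 1,000 inferences: {text_kwh_per_1000:.5f} kWh")
    print(f"Image model, 1,000 inferences: {image_charges_per_1000:.1f} charges")
    print(f"Image model, per image: {image_charges_per_image:.2f} charges")
    print(f"Image/text energy ratio: about {ratio:,.0f}x")
```

The resulting gap of roughly 6,000 times compares only the two extremes the authors quote; it is not a statement about typical text or image models.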

Apart from the environment, what else do you see as ethical issues for AI?

Grovum thinks the “interesting thing about bias is that it is contextual to the period that you're looking at. With the absolute best of intentions, we will get terribly biased outcomes if we collect all local, state, and federal court decisions from 1950 to today, because so much of the language used in any document from another time is going to be laden with value judgements that are ‘bias-free’ when written, but terribly outdated when viewed from a period with a different perspective. We are perhaps the most enlightened we've ever been, but also, hopefully, the least enlightened we will be going forward.

“How do you manage the evolving public consciousness of morality when constructing data sets that will inform AI going forward and might even have stewardship over aspects of our lives that we manage ourselves, let government manage, or ignore as too hard right now? While it’s frightening to contemplate the ramifications of getting it wrong, it's more frightening not to think about this.”

What are your thoughts on the privacy and ethical issues arising from smart glasses, which can record what the wearer sees?

For Grovum, “this ties to the earlier point that Sara Migliorini makes about biometric harm. We need to consider redefining boundaries of personal freedom that are defined by others' rights rather than by our own personal rights. For example, does the GDPR right to be forgotten need to extend to a right not to be recognized in the first place?

“This would trump the right to record in a public space, which seems a bit harsh, but it raises an interesting question: given that we now live in a society in which public recordings can be tied back to individuals recorded in that public space, without those people’s consent, using biometric data, should the right to anonymity in a public place supersede the right to freedom of expression (including the right to capture others' personal data in a public place)?”

Considering ethics while having fun

Our society is grappling with privacy issues related to customer data sales and the use of biometric data. As technology, including AI, becomes increasingly integrated into everyday life through seemingly fun applications like avatars and smart glasses, we must ponder the ethical implications of each new technology — from its environmental impact to concerns about its development.

As in-house counsel, we should consider these issues not only personally but also on behalf of our organizations. We should initiate discussions in forums and consider addressing these issues in AI governance policies to guide our organizations. Although we may not have all the answers now, it's crucial to ask the right questions.