Banner artwork by Delook Creative / Shutterstock.com

Not all legal risks are equal, especially those associated with generative AI. After all, most generative AI tools are not built for the legal industry. They don’t inherently consider legal context or grasp the nuances of legal language. Those responsibilities fall on lawyers as they converse with AI tools, guide AI system workflows, and evaluate AI-generated output.

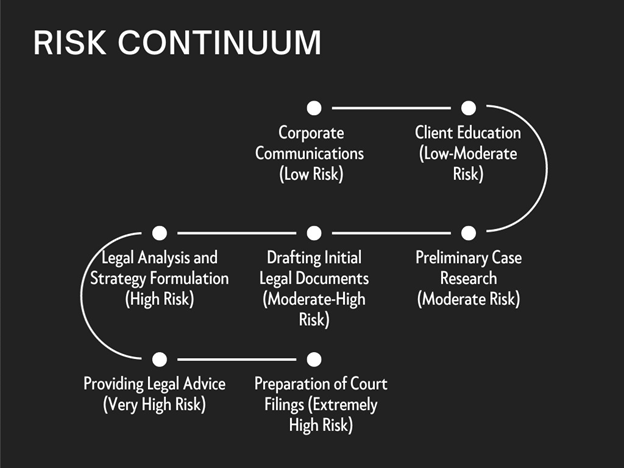

Beyond talking to a vendor or learning more about a public tool, you can use the legal risk continuum below as a framework to assess and manage the potential risks and benefits at various stages of AI implementation.

It helps you evaluate risks related to data privacy, security, bias, professional responsibility, and other considerations, guiding you on when and how to use AI responsibly.

Rate activities from low to high risk.

As the examples in the risk continuum show, the risk of using AI rises with the potential consequences of the legal work you perform.

1. Low-to-moderate risk: corporate communications and education

Generative AI tools readily produce natural-sounding text that general counsel (GCs) can use in communications with clients, employees, boards, and business partners, as well as in informational updates and educational content. These activities are typically low-to-moderate risk.

However, at this and every stage of the risk continuum, you must diligently review and verify the accuracy of AI’s outputs. If AI tools process company data to tailor your content, you must address data privacy concerns and ensure compliance with data protection laws. Also, look out for biases in AI algorithms or in the data sets used to train AI, as they could affect the quality and fairness of your communications.

2. Moderate risk: preliminary legal research

Many lawyers already use AI for legal research. Again, data privacy issues arise if an AI system accesses confidential or sensitive information during research. Bias in the training data can impact the accuracy and fairness of research outcomes.

You may be tempted to rely solely on AI-generated research. But a human must review all AI output, including research, or you risk being misled by AI “hallucinations,” as the lawyers who were fined for citing fabricated case law discovered.

3. Moderate-to-high risk: drafting initial legal documents

It’s not a matter of “if” but “when” and “how” AI tools process sensitive information to create documents and contracts. This raises the data privacy concerns discussed above, along with client confidentiality concerns.

Legal professionals must also review all AI-generated documents for accuracy and completeness to avoid errors or omissions that may have legal consequences. Ensure that AI-generated documents and contracts meet legal standards and comply with industry guidelines and regulations.

4. High risk: legal analysis and strategy

Using AI for legal analysis and strategy is a high-risk activity. Data privacy remains a concern. Hidden biases in AI algorithms and training data can skew analyses and strategic recommendations, harming outcomes. Ethically, you must insist that your AI systems operate transparently so that you can validate the reasoning behind your legal analysis and strategy.

5. Very high risk: providing legal advice

Lawyers are accountable for the accuracy, reliability, and completeness of their legal advice, even when AI is involved. Thoroughly review AI-generated advice to ensure it meets professional standards and legal obligations. Combine caution with your legal expertise and experience to avoid being misled by AI hallucinations.

6. Highest risk: preparing court filings

Ethically, lawyers must ensure that AI-generated filings represent clients effectively and adhere to court rules. Verify the accuracy of AI-generated text and confirm that court and agency filings comply with all requirements to avoid errors or omissions that could harm the company.

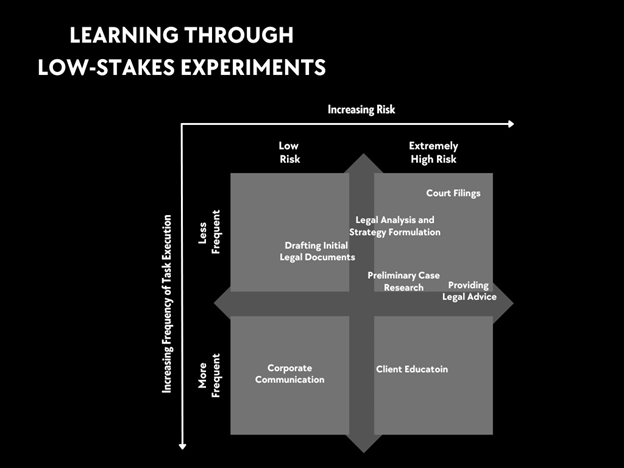

Consider other factors, such as the frequency or impact of errors.

You can further evaluate how risk interacts with additional factors, such as the activity’s frequency and the potential impact of negative consequences. The modified Ansoff’s Quadrant analysis of legal tasks below illustrates this.

Low-to-moderate-risk, high-frequency tasks like content creation and corporate communications land in quadrants one and two. Lawyers rarely apply AI to low-risk, infrequent tasks, leaving quadrant three vacant.

Quadrant four includes preliminary case research, drafting initial legal documents, legal analysis, providing legal advice, and preparing court filings. Legal teams execute these higher-risk tasks less frequently due to their intricate nature and potential legal implications.

Your risk distribution may differ depending on your practice area and system workflows. Still, this risk continuum offers a reliable framework to help you organize and assess the legal risks associated with generative AI tools, and it takes you another step toward ensuring the responsible use of AI in law.

Disclaimer: the information in any resource collected in this virtual library should not be construed as legal advice or legal opinion on specific facts and should not be considered representative of the views of its authors, its sponsors, and/or ACC. These resources are not intended as a definitive statement on the subject addressed. Rather, they are intended to serve as a tool providing practical advice and references for the busy in-house practitioner and other readers.